Measuring Metadata

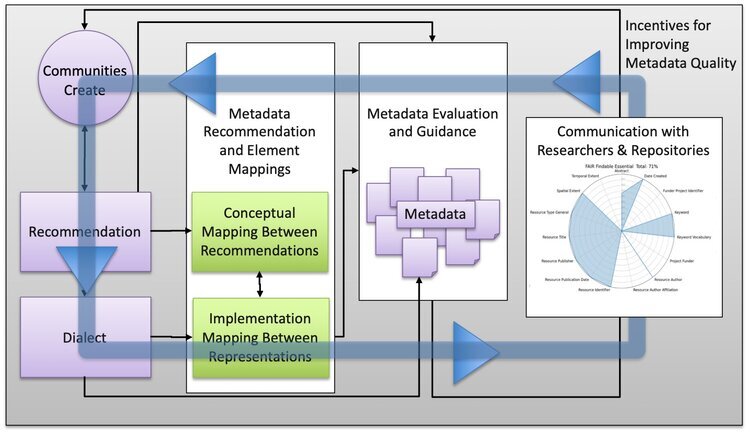

Evaluating documentation is critical for identifying good examples within a collection, for laying out a path forward, and for recording progress as the documentation improves. In the end, the real test is whether users can use and trust your data. Quantitative and qualitative steps can serve as signposts on the way to trustworthy data.

Metadata repositories typically work behind the scenes, driving data discovery portals, business processes and decisions, and publishing houses. Measuring metadata makes improvements visible. This radar plot compares CrossRef metadata completeness during two time periods. It shows the results of a concerted effort to increase the number of ORCIDs in this publication metadata. ORCIDs are unique, persistent identifiers for people. Adding them to metadata helps those people get credit for all kinds of contributions to the research community.
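Completeness can be read as the share of records in which a given field is filled in. The sketch below computes that share per field for two sets of records. A radar plot like the one above displays this kind of number. It assumes records are plain Python dictionaries. The field names and the `completeness` and `compare_periods` helpers are hypothetical: they are not the CrossRef schema, and they are not the method used to produce the plot.

```python
from typing import Iterable, Mapping

# Hypothetical field names for illustration; real schemas (e.g. CrossRef) differ.
FIELDS = ["orcid", "abstract", "references", "funder", "license"]


def is_filled(value: object) -> bool:
    """Treat None, empty strings, and empty containers as missing."""
    if value is None:
        return False
    if isinstance(value, (str, list, tuple, dict, set)):
        return len(value) > 0
    return True


def completeness(records: list[Mapping[str, object]],
                 fields: Iterable[str] = FIELDS) -> dict[str, float]:
    """Percentage of records in which each field is filled."""
    total = len(records)
    result: dict[str, float] = {}
    for field in fields:
        filled = sum(1 for record in records if is_filled(record.get(field)))
        result[field] = 100.0 * filled / total if total else 0.0
    return result


def compare_periods(before: list[Mapping[str, object]],
                    after: list[Mapping[str, object]],
                    fields: Iterable[str] = FIELDS) -> dict[str, tuple[float, float]]:
    """Pair per-field completeness for two time periods as (before, after)."""
    fields = list(fields)  # iterated twice below
    b = completeness(before, fields)
    a = completeness(after, fields)
    return {field: (b[field], a[field]) for field in fields}


if __name__ == "__main__":
    # Toy records; 0000-0002-1825-0097 is ORCID's documented example iD.
    before = [{"orcid": "", "abstract": "x"}, {"orcid": None, "abstract": ""}]
    after = [{"orcid": "0000-0002-1825-0097", "abstract": "x"},
             {"orcid": "0000-0002-1825-0097", "abstract": ""}]
    print(compare_periods(before, after, ["orcid", "abstract"]))
    # {'orcid': (0.0, 100.0), 'abstract': (50.0, 50.0)}
```

Each field becomes one spoke of the radar plot, and the two periods become two overlaid shapes.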

Make Improvement Visible

Adding ORCIDs connects people to the PID Graph!

The tools we use for creating metadata influence the content we can create and how complete that content is. Measuring metadata makes it possible to compare metadata before and after changes to those tools. In this case, changing a publishing platform led to increases in metadata content across the board!
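A before/after comparison can be reduced to the percentage-point change in completeness for each field. This minimal sketch assumes completeness snapshots are already available as field-to-percentage dictionaries. The helpers `completeness_change` and `improved_across_the_board` are hypothetical, and the numbers are illustrative rather than the results shown in the figure.

```python
def completeness_change(before: dict[str, float],
                        after: dict[str, float]) -> dict[str, float]:
    """Percentage-point change for each field measured in both snapshots."""
    shared = sorted(before.keys() & after.keys())
    return {field: after[field] - before[field] for field in shared}


def improved_across_the_board(changes: dict[str, float]) -> bool:
    """True only if at least one field was measured and every field went up."""
    return bool(changes) and all(delta > 0 for delta in changes.values())


if __name__ == "__main__":
    # Illustrative numbers only, not real CrossRef results.
    old_platform = {"orcid": 10.0, "abstract": 40.0, "references": 60.0}
    new_platform = {"orcid": 35.0, "abstract": 55.0, "references": 90.0}
    changes = completeness_change(old_platform, new_platform)
    print(changes)  # {'abstract': 15.0, 'orcid': 25.0, 'references': 30.0}
    print(improved_across_the_board(changes))  # True
```

Only fields present in both snapshots are compared. A field added by the new platform therefore shows up as new coverage rather than as a change.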

Find Systemic Changes

Big improvements across the board

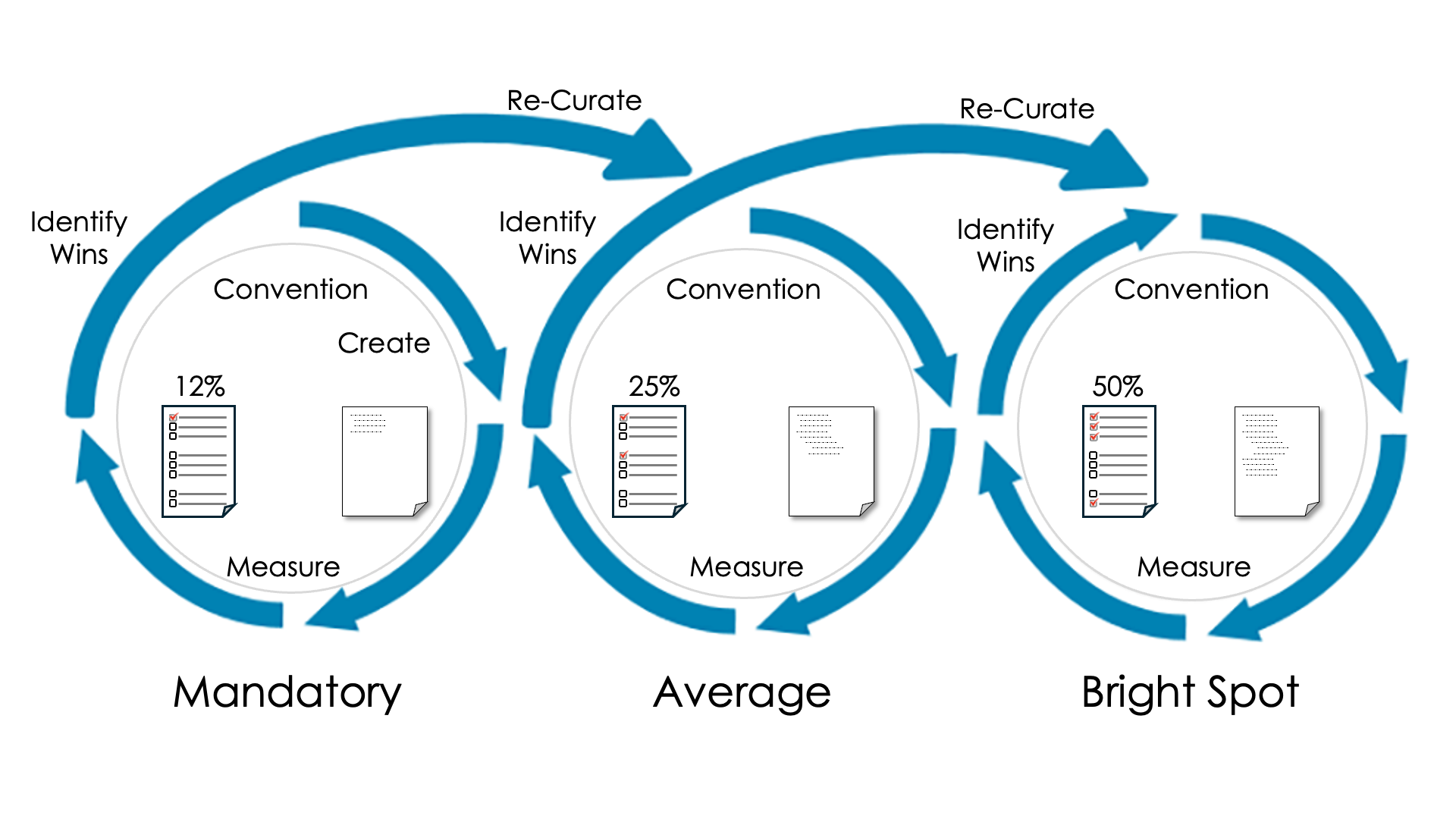

Large metadata infrastructure providers like CrossRef and DataCite provide access to metadata from many providers, some of which manage many repositories. It is important to find Bright Spots in those groups: providers with great metadata that can serve as examples for others starting improvement efforts. The physics publisher Stichting SciPost had the most complete metadata of the almost 1,700 CrossRef members measured using the CrossRef Participation Reports. Great job!
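One simple way to look for Bright Spots is to rank members by their average completeness across fields. The sketch assumes per-member completeness percentages are already in hand and uses an unweighted mean. The CrossRef Participation Reports may summarize completeness differently. The member names, fields, and `bright_spots` helper are hypothetical.

```python
from statistics import mean


def bright_spots(scores: dict[str, dict[str, float]],
                 top_n: int = 3) -> list[tuple[str, float]]:
    """Rank members by the unweighted mean of their per-field completeness."""
    # Members with no measured fields are skipped (mean of nothing is undefined).
    averages = [(member, mean(fields.values()))
                for member, fields in scores.items() if fields]
    averages.sort(key=lambda item: item[1], reverse=True)
    return averages[:top_n]


if __name__ == "__main__":
    # Hypothetical members and percentages for illustration.
    members = {
        "member-a": {"orcid": 90.0, "abstract": 80.0},
        "member-b": {"orcid": 20.0, "abstract": 50.0},
        "member-c": {"orcid": 60.0, "abstract": 70.0},
    }
    print(bright_spots(members, top_n=2))  # [('member-a', 85.0), ('member-c', 65.0)]
```

An unweighted mean treats every field as equally important. A community that cares most about ORCIDs or funder information might prefer to weight those fields more heavily.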

Identify Bright Spots

Good work deserves recognition!

Change Is Hard

Metadata improvement is hard when it involves organizational change. The Heath brothers offer valuable insight into organizational change in their book Switch. This talk applies some of their insights to metadata improvement.