The Global Research Infrastructure and the NSF Public Access Repository

Ted Habermann, Metadata Game Changers

Jamaica Jones, University of Pittsburgh

Cite this blog as Habermann, T. and Jones, J. (2024). The Global Research Infrastructure and the NSF Public Access Repository. Front Matter. https://doi.org/10.59350/s2mgr-mwt45

Understanding the impact of research funded by U.S. Federal agencies and other funders is an important, ongoing task. Historically, funding information has been recorded as acknowledgements in research articles. This information may include the name of the funder(s), persistent identifier(s) for the funder, and identifier(s) for the award(s). Where possible, publishers convert this text to structured metadata, which in many cases is made available through Crossref. In some cases, structured metadata may be collected directly from the researcher through a submission system.
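Crossref exposes this structured funding information in each work's `funder` list. The sketch below is a minimal illustration, assuming a record shaped like the `message` object returned by the Crossref REST API for a single work, where each funder entry may carry `name`, `DOI`, and `award` keys. The function name `nsf_awards_from_crossref` is hypothetical, and treating an NSF award number as a run of exactly seven digits is an assumption about how awards are written; other Funder Registry identifiers (for example, those of individual NSF directorates) are not matched.

```python
import re
from typing import Any

# Funder Registry DOI of the U.S. National Science Foundation.
NSF_FUNDER_DOI = "10.13039/100000001"

# Assumption: an NSF award number is a run of exactly seven digits, possibly
# preceded by a division code (e.g. "CBET-2038246" or "OCE1314642").
AWARD_DIGITS = re.compile(r"(?<!\d)(\d{7})(?!\d)")


def nsf_awards_from_crossref(work: dict[str, Any]) -> set[str]:
    """Collect NSF award numbers from the "funder" list of a Crossref work."""
    awards: set[str] = set()
    for funder in work.get("funder", []):
        name = funder.get("name", "").strip().lower()
        # Match on the funder identifier when present, else fall back to the name.
        if funder.get("DOI") != NSF_FUNDER_DOI and name != "national science foundation":
            continue
        for award in funder.get("award", []):
            awards.update(AWARD_DIGITS.findall(award))
    return awards


# Hypothetical record; the DOI is a placeholder, not a real article.
example = {
    "DOI": "10.1234/example",
    "funder": [
        {"name": "National Science Foundation", "DOI": NSF_FUNDER_DOI,
         "award": ["CBET-1818759", "CBET-2038246"]},
        {"name": "Some Other Funder", "award": ["1234567"]},
    ],
}
print(sorted(nsf_awards_from_crossref(example)))  # ['1818759', '2038246']
```

Award strings in publisher metadata are free text, so extracting digits rather than comparing whole strings tolerates prefixes such as "CBET-".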

The INFORMATE Project (Habermann et al., 2023) addresses the question of how the growing network of connected articles, datasets, and other research objects, i.e. the global research infrastructure, might contribute to identifying funders and awards for research objects. Three sources are considered:

1. The NSF Award Database supports the NSF Award Search and is the most complete source for NSF Award information.

2. The National Science Foundation Public Access Repository (PAR) is a repository of journal articles and other research objects related to NSF awards and reported by researchers.

3. CHORUS selects and organizes metadata from the global research infrastructure and provides dashboards and reports for funders, publishers, and journals.

These sources serve different purposes and have very different data journeys (Bates et al., 2016), so differences between them are not unexpected. The NSF Award Database is maintained internally by the group that manages those awards and associated reporting. Items in PAR are entered by researchers, and items in CHORUS are retrieved from Crossref based on funder metadata. Comparisons across these data sources raise questions at two levels of resolution: award and DOI. Here we focus on the more detailed resolution (DOI) and the two data sources relevant at that level: CHORUS and PAR. Specifically, we seek to understand the relationships between NSF awards and the resulting publications that appear in PAR.

Finding PAR DOIs

The PAR user interface is designed for interactive searching by human users using a variety of inputs. It supports simple (Figure 1) and advanced (Figure 2) searches and there are several ways to use the interface to search for award numbers. The award number can be 1) inserted into the simple search, 2) inserted as an Identifier Number in the advanced search, or 3) inserted as an Award ID in the advanced search.

Figure 1. Simple PAR search interface.

Figure 2. Advanced PAR search interface. Note specific input field for Award ID.

Table 1 shows the number of results (DOIs) returned by simple and advanced searches for several award numbers. The numbers differ for three of the five awards, and in each of those cases the simple search returns more DOIs than the advanced search. The largest difference is for award 1314642: ten PAR DOIs are found by the simple search and only three by the advanced search. This award has 24 associated DOIs in CHORUS.

| Target Award | Simple search (DOIs) | Advanced search (DOIs) |
|---|---|---|
| 2038246 | 14 | 11 |
| 2028868 | 12 | 12 |
| 1314642 | 10 | 3 |
| 2120947 | 2 | 2 |
| 1805022 | 36 | 35 |

Table 1. Numbers of DOIs returned by simple and advanced PAR searches for selected award numbers.

Table 2 shows all DOIs associated with award 1314642 in PAR and CHORUS, along with the sources where each DOI was found. The three DOIs found by the PAR advanced search include the award ID (1314642) in the PAR Award IDs column. The ten DOIs found by the PAR simple search have P in their Source label (P or C&P). It appears that the advanced search for an Award ID retrieves only items whose AWARD_IDS metadata field contains the target award number, whereas the simple search also finds items that are connected to the award but do not include the award ID in their metadata.

| DOI | Year | PAR Award IDs | Source |
|---|---|---|---|
| 10.1038/s41598-023-28166-2 | 2021 | | |
| 10.1016/j.hal.2019.101728 | 2017 | | |
| 10.1002/lno.10530 | 2014 | | |
| 10.1021/pr5004664 | 2016 | | |
| 10.1002/lno.10664 | 2017 | | |
| 10.1111/eva.12695 | 2017 | | |
| 10.1038/s42003-021-02626-9 | 2018 | | |
| 10.1016/j.hal.2018.08.001 | 2018 | | |
| 10.1111/jpy.12386 | 2019 | | |
| 10.1093/toxsci/kfz217 | 2020 | | |
| 10.1016/j.hal.2015.05.010 | 2015 | | |
| 10.1016/j.ecss.2015.06.023 | 2015 | | |
| 10.1016/j.hal.2015.07.009 | 2015 | | |
| 10.1016/j.bbagen.2015.07.010 | 2015 | | |
| 10.1016/j.ntt.2015.04.093 | 2015 | | |
| 10.1021/acs.chemrestox.5b00003 | 2015 | | |
| 10.1016/j.hal.2015.11.003 | 2016 | | |
| 10.1016/j.neuro.2015.11.012 | 2016 | | |
| 10.1016/j.taap.2016.02.001 | 2016 | | |
| 10.1016/j.marpolbul.2016.01.057 | 2016 | | |
| 10.1093/toxsci/kfx192 | 2017 | | |
| 10.1016/j.toxicon.2018.06.067 | 2018 | | |
| 10.1093/eep/dvy005 | 2018 | | |
| 10.1016/j.hal.2018.06.007 | 2018 | | |
| 10.1073/pnas.1901080116 | 2019 | | |
| 10.1093/toxsci/kfab066 | 2021 | | |

Table 2. DOIs associated with award 1314642 in PAR and CHORUS.
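The inferred difference between the two searches can be expressed as a toy model. This is a hypothetical sketch, not PAR's actual schema or search logic: `ParRecord`, `award_ids` (standing in for the AWARD_IDS field), and `linked_awards` (standing in for any other connection between an item and an award) are invented names. Under this model, advanced-search results are always a subset of simple-search results, which is consistent with Table 1.

```python
from dataclasses import dataclass, field


@dataclass
class ParRecord:
    """Hypothetical, simplified stand-in for a PAR item."""
    doi: str
    award_ids: set[str] = field(default_factory=set)      # AWARD_IDS metadata field
    linked_awards: set[str] = field(default_factory=set)  # other award connections


def advanced_search(records: list[ParRecord], award: str) -> set[str]:
    # Inferred behavior: match only awards listed in the AWARD_IDS field.
    return {r.doi for r in records if award in r.award_ids}


def simple_search(records: list[ParRecord], award: str) -> set[str]:
    # Inferred behavior: also match items connected to the award in other ways.
    return {r.doi for r in records if award in r.award_ids or award in r.linked_awards}


# Placeholder DOIs for illustration only.
records = [
    ParRecord("10.1234/a", award_ids={"1314642"}),
    ParRecord("10.1234/b", linked_awards={"1314642"}),
    ParRecord("10.1234/c", award_ids={"2038246"}),
]
print(sorted(advanced_search(records, "1314642")))  # ['10.1234/a']
print(sorted(simple_search(records, "1314642")))    # ['10.1234/a', '10.1234/b']
```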

Table 2 also includes the twenty-four DOIs associated with award number 1314642 in the CHORUS All Report from early 2024. DOIs found in both PAR and CHORUS are marked C&P in the Source column, and those found only in CHORUS are marked C. These results indicate that, at least for this award, many DOIs that acknowledge NSF funding in the literature, and are therefore in CHORUS, are not included in PAR. How can these missed DOIs be identified across the entire dataset?
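The Source labels in Table 2 follow from basic set operations on the DOIs returned by each source. A minimal sketch, assuming both inputs are plain collections of DOI strings; DOIs are compared case-insensitively because DOI names are case-insensitive. The function name `label_sources` is hypothetical.

```python
def label_sources(par_dois: set[str], chorus_dois: set[str]) -> dict[str, str]:
    """Label every DOI "C&P" (both sources), "C" (CHORUS only) or "P" (PAR only)."""
    # DOI names are case-insensitive, so normalize before comparing.
    par = {d.strip().lower() for d in par_dois}
    chorus = {d.strip().lower() for d in chorus_dois}
    labels = {}
    for doi in sorted(par | chorus):
        if doi in par and doi in chorus:
            labels[doi] = "C&P"
        elif doi in chorus:
            labels[doi] = "C"
        else:
            labels[doi] = "P"
    return labels


# Placeholder DOIs for illustration only.
print(label_sources({"10.1234/A", "10.1234/b"}, {"10.1234/a", "10.1234/c"}))
# {'10.1234/a': 'C&P', '10.1234/b': 'P', '10.1234/c': 'C'}
```

If the ten simple-search DOIs are the full PAR set for award 1314642, inclusion-exclusion on the 26 rows of Table 2 gives 10 + 24 - 26 = 8 DOIs labeled C&P, leaving 16 labeled C and 2 labeled P.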

Comparing CHORUS and PAR DOIs

PAR and CHORUS have very different data journeys, so it is not surprising that they include different DOIs. The PAR search interface makes it possible to query for connections between awards and DOIs using URLs with the form: https://par.nsf.gov/search/term:[awardID]/identifier:[DOI]. If the award number and DOI are connected in PAR, this URL returns information about the DOI as formatted HTML text in the search result. If the award number and DOI are not associated, that text is missing, so the total number of bytes returned is smaller. Thus, the existence of the award-DOI connection could be tested using the response length.
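The URL pattern above can be filled in and fetched with the Python standard library. A minimal sketch: `par_query_url` and `response_length` are hypothetical helper names, the DOI is inserted verbatim as in the pattern (whether PAR requires percent-encoding of unusual DOI characters is an untested assumption), and the byte count depends on PAR's page layout at the time of the request.

```python
import urllib.request

# URL pattern described in the text; award ID and DOI are substituted in.
PAR_QUERY = "https://par.nsf.gov/search/term:{award}/identifier:{doi}"


def par_query_url(award_id: str, doi: str) -> str:
    # The DOI is inserted verbatim, matching the pattern above.
    return PAR_QUERY.format(award=award_id.strip(), doi=doi.strip())


def response_length(url: str, timeout: float = 30.0) -> int:
    """Fetch a page and return the length of its body in bytes."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return len(resp.read())


url = par_query_url("2038246", "10.5194/acp-23-687-2023")
print(url)  # https://par.nsf.gov/search/term:2038246/identifier:10.5194/acp-23-687-2023
# response_length(url) performs a live HTTP request.
```

For a run over hundreds of thousands of pairs, spacing out requests (for example with `time.sleep`) keeps the load on the server modest.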

We tested this hypothesis with this URL pattern, using DOIs found in CHORUS for ~50,000 awards, and examined the response lengths. Figure 3 shows that two distinct groups emerge from these data. The largest group, which includes over 180,000 URLs, has response lengths between 225,500 and 226,000 bytes. The second group is more variable, with lengths spread over a much larger range, 269,500 to 274,500 bytes. There is a clear gap between the two groups.

This is the behavior we would expect if the award-DOI pairs in the first group are not included in PAR: they all return essentially the same response, just the page framework without any article information. Queries for pairs that are connected in PAR have much more variable lengths because of the HTML describing the DOI. The data therefore show that substantially more award-DOI pairs fall in the first group, i.e., are not associated in PAR.

Figure 3. Response lengths for 300,000+ PAR queries for awards and DOIs.
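The two groups can be turned into a simple classifier. This sketch assumes the byte ranges reported above, which reflect PAR's page layout at the time of the study and would need to be re-derived for any later run; `classify_length` and `summarize` are hypothetical names. Lengths outside both observed ranges are flagged rather than forced into a group, so that a layout change or an error page does not silently count as a match or a miss.

```python
from collections import Counter
from typing import Iterable

# Observed response-length ranges in bytes (assumed; layout-dependent).
NOT_IN_PAR = (225_500, 226_000)  # page framework only
IN_PAR = (269_500, 274_500)      # framework plus HTML describing the DOI


def classify_length(n_bytes: int) -> str:
    if NOT_IN_PAR[0] <= n_bytes <= NOT_IN_PAR[1]:
        return "not in PAR"
    if IN_PAR[0] <= n_bytes <= IN_PAR[1]:
        return "in PAR"
    return "unclassified"  # outside both groups: inspect by hand


def summarize(lengths: Iterable[int]) -> Counter:
    """Count how many responses fall into each group."""
    return Counter(classify_length(n) for n in lengths)


print(summarize([225_812, 225_790, 271_044, 250_000]))
```

Applied to Table 3, the six lengths below 226,000 bytes and the five above 269,500 bytes would be labeled "not in PAR" and "in PAR", respectively.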

We examined several DOIs manually to test this conclusion. Table 3 shows the results for eleven DOIs associated with award number 2038246 in CHORUS. Their response lengths fall into the groups seen in Figure 3: six are below 226,000 bytes and five are above 269,500 bytes. This suggests that five of the eleven DOIs are connected to award 2038246 in PAR and six are not.

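| DOI | Publication | Award | Length (bytes) |
|---|---|---|---|
| 10.5194/esd-2021-70 | 2022 | | |
| 10.5194/acp-2022-372 | 2022 | | |
| 10.5194/acp-23-687-2023 | 2023 | | |
| 10.1002/essoar.10509627.1 (preprint) | 2021 | | |
| 10.5194/egusphere-2023-117 | 2023 | | |
| 10.1088/2515-7620/acf441 | 2023 | | |
| 10.1029/2023gl104417 | 2023 | | |
| 10.1029/2023gl104726 | 2023 | | |
| 10.1029/2023ef003851 | 2023 | | |
| 10.1029/2023jd039434 | 2023 | | |
| 10.5194/esd-13-201-2022 | 2023 | | |

Table 3. PAR query results for eleven DOIs associated with award 2038246 in CHORUS.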

The timing of these publications with respect to the award period is important because NSF researchers are only expected to add items to PAR during the award period. The articles in Table 3 that are missing from PAR were all published during the award period for award 2038246. Manual checking confirmed that these six papers directly acknowledge NSF award 2038246 in their text (Table 4), consistent with the observation that they are in CHORUS with that award number.
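The timing test can be combined with the comparison of CHORUS and PAR DOIs to list the publications an investigator would have been expected to report. A minimal sketch, assuming a publication date is available for each CHORUS DOI and the award start and end dates are known; the function name and the dates in the example are hypothetical, not the actual period of award 2038246.

```python
from datetime import date


def missing_within_award_period(
    chorus_dois: set[str],
    par_dois: set[str],
    published: dict[str, date],
    award_start: date,
    award_end: date,
) -> list[str]:
    """CHORUS DOIs absent from PAR that were published during the award period."""
    in_par = {d.lower() for d in par_dois}  # DOIs compare case-insensitively
    hits = []
    for doi in chorus_dois:
        pub = published.get(doi)
        if pub is None or doi.lower() in in_par:
            continue  # no date to check, or already reported in PAR
        if award_start <= pub <= award_end:  # inclusive on both ends
            hits.append(doi)
    return sorted(hits)


# Hypothetical DOIs and dates, for illustration only.
print(missing_within_award_period(
    chorus_dois={"10.1234/a", "10.1234/b", "10.1234/c"},
    par_dois={"10.1234/b"},
    published={"10.1234/a": date(2022, 3, 1), "10.1234/b": date(2022, 5, 1),
               "10.1234/c": date(2019, 1, 1)},
    award_start=date(2020, 9, 1),
    award_end=date(2024, 8, 31),
))  # ['10.1234/a']
```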

| DOI | Acknowledgement (manual search) |
|---|---|
| 10.5194/esd-2021-70 | Support for Y. Zhang and D. G. MacMartin was provided by the National Science Foundation through agreement CBET-1818759 and CBET-2038246. |
| 10.5194/acp-2022-372 | National Science Foundation through agreement CBET-1818759 for DV and DGM |
| 10.5194/acp-23-687-2023 | National Science Foundation through agreement CBET-2038246 for Douglas G. MacMartin |
| 10.1002/essoar.10509627.1 | National Science Foundation through agreement CBET-1818759 and CBET-2038246. |
| 10.5194/egusphere-2023-117 | National Science Foundation (grant no. CBET-2038246) |
| 10.1088/2515-7620/acf441 | National Science Foundation through agreement CBET-1931641, as well as CBET-2038246 for DGM and BK |

Table 4. Acknowledgements, found by manual search, in the six Table 3 articles missing from PAR.
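The manual acknowledgement check could be partly automated by scanning text for award numbers. A minimal sketch, assuming NSF award numbers appear as standalone runs of seven digits (optionally with a prefix such as "CBET-", as in Table 4) and that the acknowledgement text has already been obtained; `acknowledges_award` is a hypothetical name.

```python
import re

# Seven digits not embedded in a longer number (assumed award-number format).
AWARD_NUMBER = re.compile(r"(?<!\d)(\d{7})(?!\d)")
NSF_NAME = re.compile(r"National Science Foundation|\bNSF\b")


def acknowledges_award(text: str, award_id: str) -> bool:
    """True if the text names NSF and contains the given award number."""
    return bool(NSF_NAME.search(text)) and award_id in AWARD_NUMBER.findall(text)


ack = "National Science Foundation (grant no. CBET-2038246)"
print(acknowledges_award(ack, "2038246"))  # True
print(acknowledges_award(ack, "1818759"))  # False
```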

The Big Picture

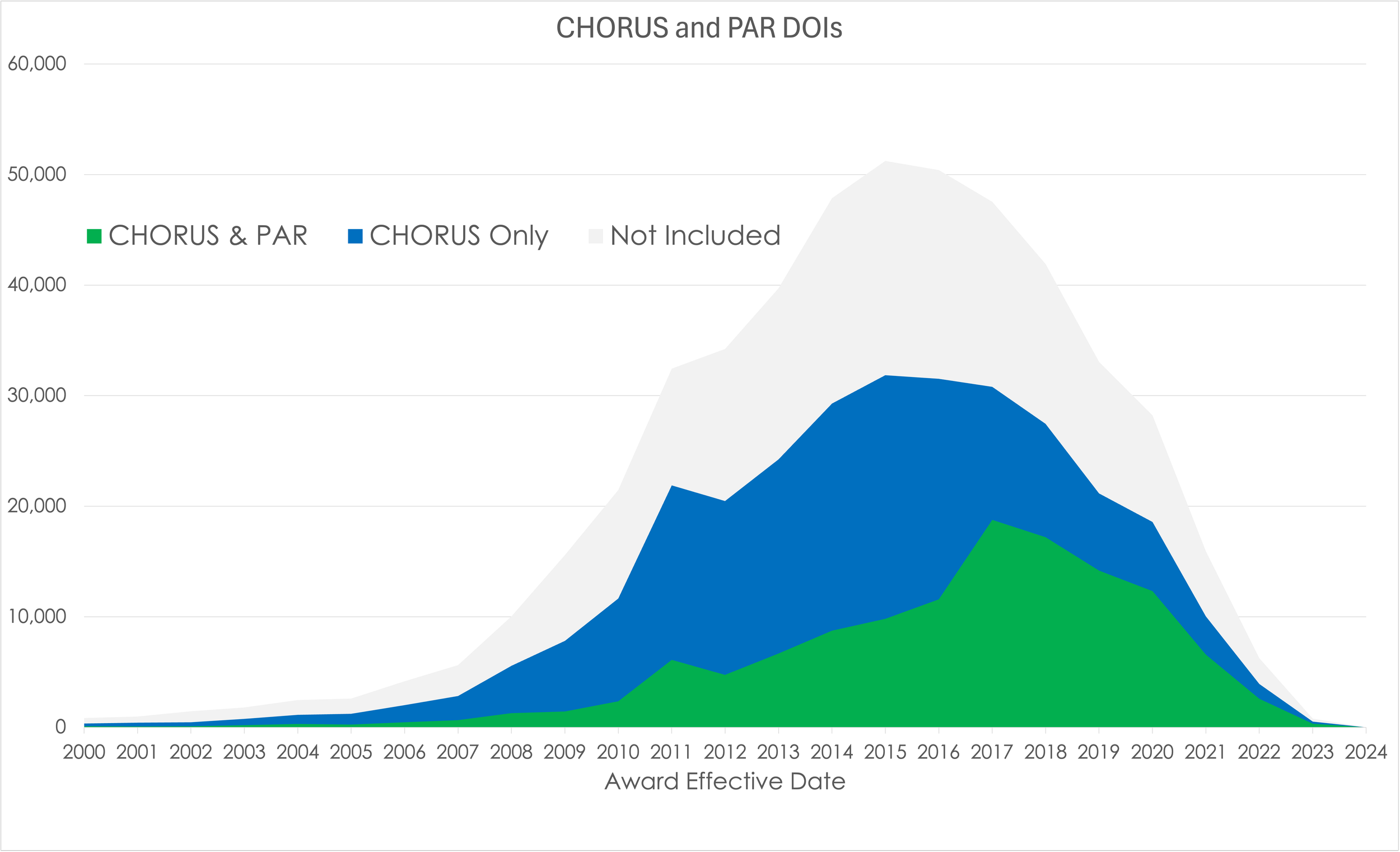

We tested 51,602 of the 97,485 awards (53%) in CHORUS and found DOI matches in PAR for 32,543 of the tested awards (63%), leaving 19,059 awards with no results published in PAR. The tested awards are associated with 307,549 DOIs in CHORUS, and 127,218 (41%) of these were found in PAR. Figure 4 shows the numbers of DOIs found in PAR in green and the DOIs found in CHORUS but not in PAR in blue.

Figure 4. Numbers of CHORUS Awards and DOIs found in PAR for a test set of 51,602 awards.
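The percentages in this section follow directly from the reported counts. This short check recomputes them; the helper `pct` is a hypothetical name, and rounding to whole percentages is assumed to match the text.

```python
def pct(part: int, whole: int) -> int:
    """Percentage rounded to the nearest whole number."""
    return round(100 * part / whole)


# Counts reported in the text.
awards_in_chorus = 97_485
awards_tested = 51_602
awards_with_par_dois = 32_543
dois_tested = 307_549
dois_in_par = 127_218

awards_without_par = awards_tested - awards_with_par_dois
dois_not_in_par = dois_tested - dois_in_par

print(pct(awards_tested, awards_in_chorus))                # 53
print(pct(awards_with_par_dois, awards_tested))            # 63
print(awards_without_par)                                  # 19059
print(pct(dois_in_par, dois_tested))                       # 41
print(dois_not_in_par, pct(dois_not_in_par, dois_tested))  # 180331 59
```

The 180,331 award-DOI pairs not found in PAR are consistent with the "over 180,000" short responses in the first response-length group, and 59% is the "~60%" quoted in the conclusions.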

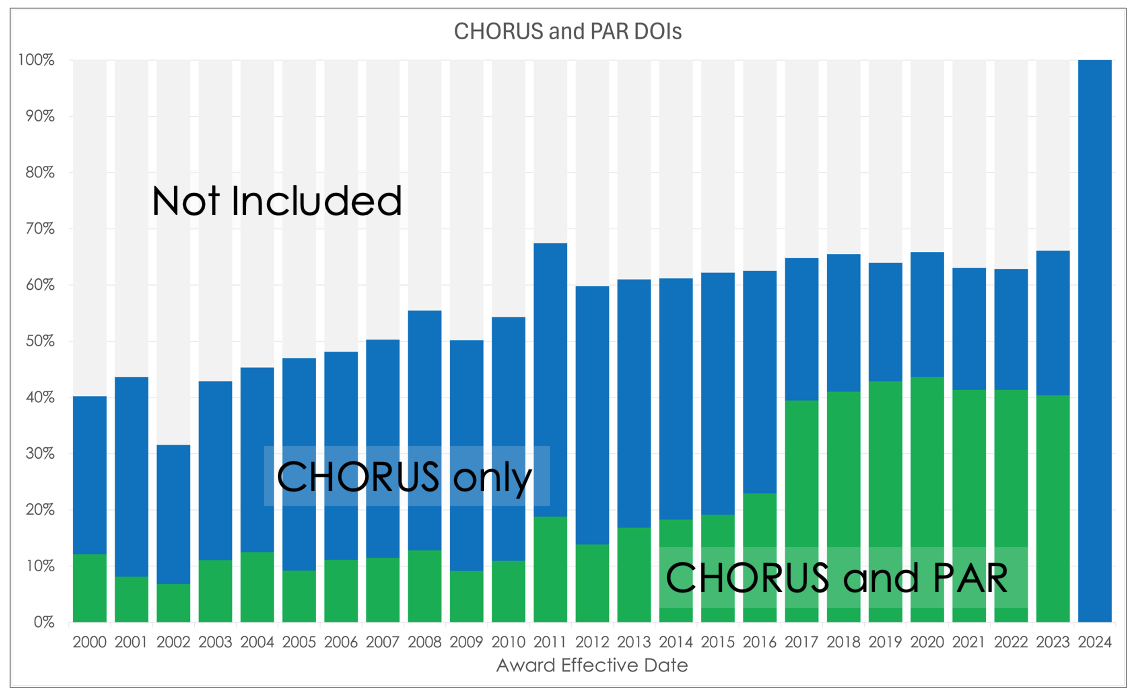

It is important to explore temporal variations in these data because their data journeys are complicated and evolve over time. Figure 5 shows the relative numbers of DOIs in three groups, found in CHORUS and PAR (green), found in CHORUS only (blue), and not included in this test (grey), as a function of Award Effective Year. The falloff at the recent end of the record reflects the delay in publishing results, or in adding publications to PAR, for recent awards.

Figure 5. Stacked timeseries of DOIs associated with NSF awards from CHORUS and PAR, CHORUS only, and Not Included by Award Effective Date.

These data show a sharp increase in the proportion of DOIs included in PAR for awards effective in 2017 or later. The increase is more evident in the stacked bar plot in Figure 6. Between 2000 and 2016, an average of 25% of the DOIs in CHORUS were also in PAR; between 2017 and 2023, this increased to 64%.

Figure 6. % of DOIs / year found in CHORUS (blue), CHORUS and PAR (green) and not included in the test (grey). Note the jump in PAR coverage during 2017.
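The yearly percentages behind these stacked plots, and the period averages quoted above, can be computed from one record per DOI. A minimal sketch, assuming each record is an (award effective year, group) pair using the three groups in the figures; the function names are hypothetical. Whether the 25% and 64% averages are means of yearly percentages or pooled over each period is not stated, so this sketch assumes an unweighted mean of yearly percentages, with all DOIs for a year (including those not tested) as the denominator.

```python
from collections import Counter, defaultdict
from typing import Iterable


def yearly_shares(records: Iterable[tuple[int, str]]) -> dict[int, dict[str, float]]:
    """Percentage of DOIs in each group, per award effective year."""
    counts: dict[int, Counter] = defaultdict(Counter)
    for year, group in records:
        counts[year][group] += 1
    return {
        year: {g: 100 * n / sum(c.values()) for g, n in c.items()}
        for year, c in sorted(counts.items())
    }


def mean_share(shares: dict[int, dict[str, float]], group: str,
               first: int, last: int) -> float:
    """Unweighted mean of one group's yearly percentage over [first, last]."""
    values = [s.get(group, 0.0) for year, s in shares.items() if first <= year <= last]
    return sum(values) / len(values) if values else float("nan")


# Toy records (not real data): (award effective year, group).
records = [
    (2015, "CHORUS and PAR"), (2015, "CHORUS only"), (2015, "CHORUS only"),
    (2015, "CHORUS only"), (2015, "Not included"),
    (2018, "CHORUS and PAR"), (2018, "CHORUS and PAR"),
    (2018, "CHORUS and PAR"), (2018, "CHORUS only"),
]
shares = yearly_shares(records)
print(mean_share(shares, "CHORUS and PAR", 2000, 2016))  # 20.0
print(mean_share(shares, "CHORUS and PAR", 2017, 2023))  # 75.0
```

Weighting each year equally keeps a few high-volume years from dominating a period average; a pooled share would instead divide total counts over the whole period.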

Conclusions

The NSF Public Access Repository (PAR) was created to increase access to peer-reviewed, published journal articles and juried conference papers resulting from NSF funded research and to preserve the content of those publications for future access. An important goal of this effort is to collect and preserve as much of the record as possible.

The INFORMATE Project has explored the global research infrastructure to help understand PAR coverage and explore the possibility of using that infrastructure to supplement PAR content. PAR content is provided by NSF investigators during their award periods. CHORUS reports that contain all published resources in Crossref that acknowledge NSF support were used to represent the global research infrastructure.

PAR is designed to support direct user interaction rather than programmatic access using an API, so an approach was developed for checking whether an award – DOI pair from CHORUS is included in PAR. This approach was used to check over 300,000 DOIs associated with ~50,000 awards. The results indicate that, for this test set, many published papers (~60%) that are funded by NSF are not included in PAR, suggesting that processes that can help those investigators could help PAR improve completeness.

The approach developed here can provide that help by searching the global research infrastructure for published works and presenting them to the investigator for confirmation during the submission process. The data presented here suggest that this could significantly improve PAR content and, subsequently, the understanding of the impact of NSF funding.

References

Bates, J., Lin, Y.-W., and Goodale, P. (2016). Data journeys: Capturing the socio-material constitution of data objects and flows. In Big Data and Society (Vol. 3, Issue 2, p. 205395171665450). SAGE Publications. https://doi.org/10.1177/2053951716654502

Habermann, T., Jones, J., Packer, T., and Ratner, H. (2023). INFORMATE: Metadata Game Changers and CHORUS Collaborate to Make the Invisible Visible. Front Matter. https://doi.org/10.59350/yqkat-59f79