From Recommendation to Action: A New Tool for Measuring Metadata Completeness

Ted Habermann, 0000-0003-3585-6733, Erin Robinson, 0000-0001-9998-0114, Metadata Game Changers, Lisa Curtin, 0000-0003-1137-7789, Figshare, Audrey Hamelers, 0000-0003-1555-5116, Dryad

Also available on the GREI Blog.

A new collaboration between GREI and Metadata Game Changers delivers an open-source tool to help any repository visualize, measure, and enhance metadata completeness.

The Generalist Repository Ecosystem Initiative (GREI) recently released Version 2 of the GREI Metadata Recommendations, which are based on DataCite Metadata Schema 4.6. The release is a critical step toward supporting interoperability and discovery across the research ecosystem, but publishing a recommendation is only the beginning. The real challenge lies in implementing the recommended changes in repositories so that they actively improve the findability and reuse of data.

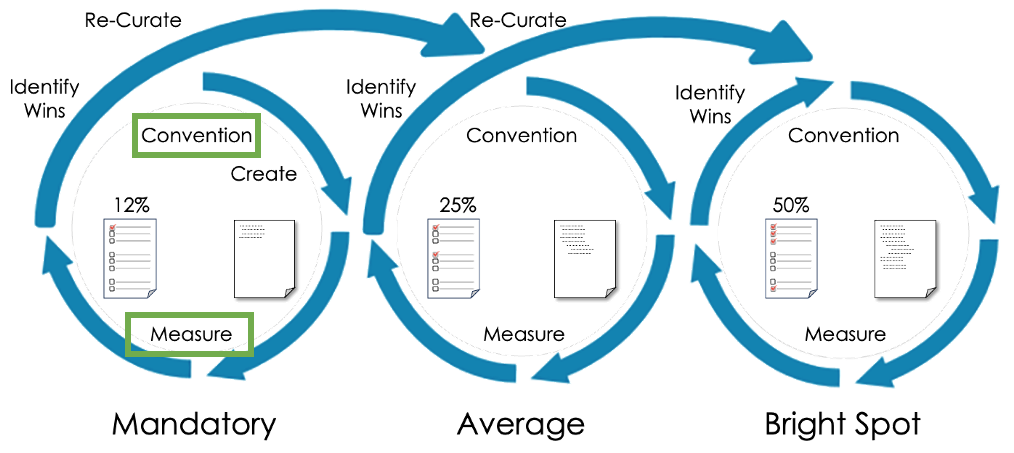

To bridge the gap between recommendation and reality, repositories need a model for continuous enhancement. That is why we are introducing the metadata completeness notebook, a new open-source tool designed to help repositories see where their metadata stands today and track improvements over time.

Figure 1. Continuous enhancement is an iterative process of planning, action, and measurement that improves metadata over time. Recommendations play the critical role of targets for these improvement iterations.

A Collaborative, NIH-Funded Partnership

Funded by the NIH Office of Data Science Strategy (Award A1OT2DB00005) through the GREI program, Metadata Game Changers partnered with the GREI repositories to develop a strategy and accompanying toolset for visualizing and enhancing metadata completeness. The project embraces the GREI model of "coopetition," encouraging repositories to work together on shared goals, such as metadata standards, while maintaining their unique identities and strengths in the broader ecosystem.

Methodology: Use Cases Over Checklists

Implementing over 50 metadata elements at once can be overwhelming. To make this manageable, the Metadata Game Changers team adopted a "Shrink the Change" approach, as described by Heath and Heath (2010), breaking large challenges into smaller, actionable pieces. Instead of a long checklist, the metadata elements were grouped into three intuitive Use Cases:

● Text Discovery: Focuses on elements, such as titles and abstracts, that support citing resources and discovering them through text searches.

● Identification: Focuses on Persistent Identifiers (PIDs) that unambiguously identify researchers, funders, and organizations.

● Connections: Focuses on linking data to other research outputs, such as software, articles, and supplemental materials.
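To make the grouping concrete, here is a minimal Python sketch of how use cases could be represented as a mapping from each use case to its metadata elements, with a use-case score taken as the average completeness of its elements. The element names, the `use_case_scores` function, and the averaging rule are all assumptions for illustration; they are not the GREI element lists or the notebook's scoring method.

```python
# Hypothetical grouping of metadata elements into the three use cases.
# The element lists are illustrative only, not the GREI recommendation's lists.
USE_CASES = {
    "Text Discovery": ["titles", "descriptions", "subjects", "creators", "publisher"],
    "Identification": [
        "creators.nameIdentifiers",
        "creators.affiliation.affiliationIdentifier",
        "fundingReferences.funderIdentifier",
    ],
    "Connections": ["relatedIdentifiers", "relatedItems"],
}


def use_case_scores(element_completeness: dict[str, float]) -> dict[str, float]:
    """Average element completeness (0.0 to 1.0) within each use case.

    Elements that are absent from the input count as 0% complete.
    """
    scores = {}
    for use_case, elements in USE_CASES.items():
        values = [element_completeness.get(element, 0.0) for element in elements]
        scores[use_case] = sum(values) / len(values)
    return scores


# Hypothetical completeness values for one repository.
example = {
    "titles": 1.0, "descriptions": 1.0, "subjects": 0.5, "creators": 1.0, "publisher": 1.0,
    "creators.nameIdentifiers": 0.5, "relatedIdentifiers": 0.25,
}
for use_case, score in use_case_scores(example).items():
    print(f"{use_case}: {score:.0%}")
```

Averaging is only one choice; a weighted score that emphasizes the elements a community cares most about would fit the same structure.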

Figure 2 illustrates how these use cases drive the measurement and visualization of completeness, and how changes in completeness can be tracked over time.

Figure 2. Repository metadata improvement process driven by use cases and measurement. Tracking example from the Dryad repository. Large increases in FAIRness during 2019 are related to ROR adoption and launch of the “New Dryad”.
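Tracking over time amounts to comparing completeness snapshots. The sketch below assumes each snapshot is a dictionary of element completeness values between 0.0 and 1.0 and reports the change for each element in percentage points, largest increase first. The function name `completeness_changes` and the snapshot values are hypothetical; they are not Dryad's actual figures.

```python
def completeness_changes(before: dict[str, float], after: dict[str, float]) -> dict[str, float]:
    """Change in completeness per element, in percentage points.

    Elements missing from a snapshot are treated as 0% complete.
    The result is ordered from the largest increase to the largest decrease.
    """
    elements = set(before) | set(after)
    changes = {
        element: round(100 * (after.get(element, 0.0) - before.get(element, 0.0)), 1)
        for element in elements
    }
    return dict(sorted(changes.items(), key=lambda item: item[1], reverse=True))


# Hypothetical snapshots for one repository (not real Dryad data).
snapshot_2018 = {"titles": 1.0, "affiliationIdentifier": 0.05, "relatedIdentifiers": 0.40}
snapshot_2020 = {"titles": 1.0, "affiliationIdentifier": 0.85, "relatedIdentifiers": 0.45}

for element, delta in completeness_changes(snapshot_2018, snapshot_2020).items():
    print(f"{element}: {delta:+.1f} points")
```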

Introducing the Metadata Completeness Notebook

To help repositories visualize their current metadata completeness, Metadata Game Changers built on their existing completeness tool to develop this Python notebook [1] [2]. The notebook uses the GREI metadata recommendations to evaluate the completeness of metadata for NIH-funded resources against these use cases.
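As an illustration of what evaluating completeness can mean, the sketch below computes, for each element, the fraction of records in which it has a non-empty value. It assumes records shaped like the `attributes` objects returned by the DataCite REST API (fields such as `titles`, `descriptions`, `creators`, and `relatedIdentifiers`) and uses hypothetical dotted paths such as `creators.nameIdentifiers` to reach nested fields. The helper names `has_value` and `element_completeness` are invented for this example, and the notebook's own logic may differ.

```python
from typing import Any

# Values that count as "missing" when checking an element.
EMPTY = (None, "", [], {})


def has_value(record: dict[str, Any], path: str) -> bool:
    """True if following the dotted path reaches at least one non-empty value.

    Lists are searched item by item, so "creators.nameIdentifiers" is
    satisfied when at least one creator has a name identifier.
    """
    current: list[Any] = [record]
    for key in path.split("."):
        found: list[Any] = []
        for node in current:
            if isinstance(node, dict) and key in node:
                value = node[key]
                # Flatten lists so the next key is looked up in each item.
                found.extend(value if isinstance(value, list) else [value])
        current = found
    return any(value not in EMPTY for value in current)


def element_completeness(records: list[dict[str, Any]], paths: list[str]) -> dict[str, float]:
    """Fraction of records (0.0 to 1.0) with a non-empty value for each path."""
    if not records:
        return {path: 0.0 for path in paths}
    return {path: sum(has_value(r, path) for r in records) / len(records) for path in paths}


# Two hypothetical records using DataCite REST API attribute names.
# The ORCID below is a placeholder, not a real identifier.
records = [
    {
        "titles": [{"title": "Survey responses"}],
        "descriptions": [{"description": "An abstract.", "descriptionType": "Abstract"}],
        "creators": [{"name": "Doe, Jane",
                      "nameIdentifiers": [{"nameIdentifier": "https://orcid.org/0000-0000-0000-0000",
                                           "nameIdentifierScheme": "ORCID"}]}],
    },
    {
        "titles": [{"title": "Imaging data"}],
        "descriptions": [],
        "creators": [{"name": "Roe, Richard", "nameIdentifiers": []}],
    },
]

print(element_completeness(records, ["titles", "descriptions", "creators.nameIdentifiers", "relatedIdentifiers"]))
```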

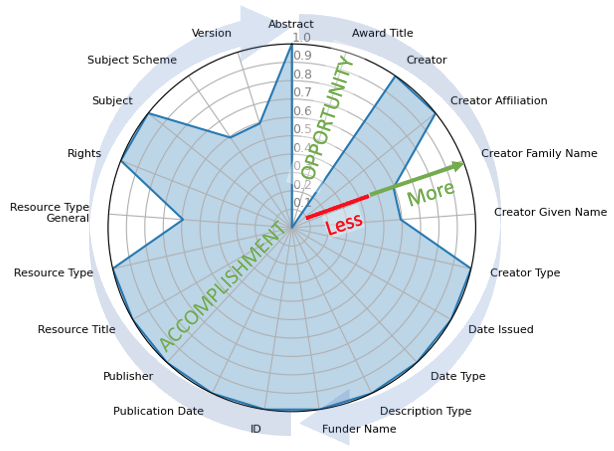

The tool moves beyond simple spreadsheets, generating "radar plots" that visualize completeness from 0% (center) to 100% (edge). This visualization strategy helps identify "Bright Spots"—areas where a repository is excelling. These bright spots serve as opportunities for the community to learn from what is working well.

Figure 3. Completeness of metadata elements in the Text Use Case. The elements are arranged around the edge of the plot with completeness going from 0% in the middle of the plot to 100% at the edge. Elements that are complete, i.e. filled to the edge of the plot, are accomplishments. Elements that are missing, or only partially filled, are opportunities for improvement.
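The radar plot geometry is straightforward: each element gets its own evenly spaced spoke, and the distance from the center equals its completeness. The sketch below computes the vertex coordinates in plain Python, starting at the top of the plot and moving clockwise. The function name `radar_vertices` and the clockwise layout are assumptions; in practice a plotting library would draw the polygon from values like these.

```python
import math


def radar_vertices(completeness: dict[str, float]) -> list[tuple[str, float, float]]:
    """Return (element, x, y) for each radar-plot vertex.

    Spokes are evenly spaced, starting at the top (12 o'clock) and going
    clockwise. Radius is completeness: 0.0 at the center, 1.0 at the edge.
    """
    n = len(completeness)
    vertices = []
    for i, (element, value) in enumerate(completeness.items()):
        radius = min(max(value, 0.0), 1.0)  # clamp to the plot's 0-100% range
        angle = math.pi / 2 - 2 * math.pi * i / n  # clockwise from the top
        vertices.append((element, radius * math.cos(angle), radius * math.sin(angle)))
    return vertices


# Hypothetical completeness values for four Text elements.
for element, x, y in radar_vertices({"titles": 1.0, "descriptions": 0.5, "subjects": 0.0, "creators": 1.0}):
    print(f"{element}: ({x:.2f}, {y:.2f})")
```

A complete element lands on the outer edge, while a missing one collapses to the center, which is what makes gaps stand out visually.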

Initial baselines established using the tool reveal that while GREI repositories are currently strong in Text Discovery, there are significant opportunities to enhance the Identification and Connections use cases in future iterations.
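The distinction between accomplishments (elements filled to the edge of the radar plot) and opportunities (missing or partially filled elements) can be expressed as a simple threshold test. In this sketch, an element counts as an accomplishment when its completeness reaches the threshold (100% by default), and the remaining opportunities are listed least complete first. The function name `classify_elements`, the adjustable threshold, and the example values are assumptions for illustration.

```python
def classify_elements(completeness: dict[str, float], threshold: float = 1.0) -> tuple[list[str], list[str]]:
    """Split elements into accomplishments and opportunities.

    Accomplishments reach the threshold (1.0 means filled to the edge of the
    plot) and are listed alphabetically; opportunities are listed least
    complete first, so the largest gaps appear at the top.
    """
    accomplishments = sorted(e for e, v in completeness.items() if v >= threshold)
    opportunities = sorted(
        (e for e, v in completeness.items() if v < threshold),
        key=lambda e: completeness[e],
    )
    return accomplishments, opportunities


# Hypothetical values for a few Text Discovery elements.
text_use_case = {"titles": 1.0, "descriptions": 0.8, "subjects": 0.2, "publisher": 1.0}
done, todo = classify_elements(text_use_case)
print("Accomplishments:", done)
print("Opportunities:", todo)
```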

The Path to Enhancement

This work is about continuous enhancement and tracking wins over time. We invite the broader DataCite repository community to use this resource. If your repository includes resources funded by the NIH, you can use the openly available notebook to measure your repository’s metadata completeness and identify your own bright spots. If your repository does not include NIH-funded resources, try the more general FAIR notebook.
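Before any completeness check, a repository needs the set of records to evaluate. One possible approach, shown here as an unverified sketch, builds a DataCite REST API request for DOIs from a single repository client whose funding references include the NIH Funder Registry identifier (10.13039/100000002). We have not confirmed that the notebook selects NIH-funded records this way. The client ID `example.repo` and the function name `nih_query_url` are hypothetical, and because funder identifiers are not always recorded in the same form (and individual NIH institutes have their own identifiers), a real harvest may need broader queries.

```python
from urllib.parse import urlencode

DATACITE_API = "https://api.datacite.org/dois"
# Funder Registry DOI for the National Institutes of Health. Assumption: records
# store it in this full-URL form; some may use "10.13039/100000002" instead.
NIH_FUNDER_ID = "https://doi.org/10.13039/100000002"


def nih_query_url(client_id: str, page_size: int = 100) -> str:
    """Build a DataCite REST API URL for one client's NIH-funded DOIs.

    client_id is a DataCite repository client ID (hypothetical here).
    The query string is passed to the API's full-text `query` parameter.
    """
    params = {
        "client-id": client_id,
        "query": f'fundingReferences.funderIdentifier:"{NIH_FUNDER_ID}"',
        "page[size]": page_size,
    }
    return f"{DATACITE_API}?{urlencode(params)}"


print(nih_query_url("example.repo"))
```

The records returned in each page's `data` list carry their metadata under `attributes`, which is the shape assumed by the completeness sketch earlier in this post.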

By leveraging these tools and maintaining the collaborative spirit of the GREI program, we can collectively drive the evolution of the open data ecosystem so datasets can more easily be found and reused across repositories to further scientific discovery.

References

Heath, C. and Heath, D. (2010). Switch: How to Change Things When Change Is Hard. Crown Currency. https://heathbrothers.com/books/switch/.

Engage with the GREI Community

The GREI GitHub Discussion Board is an easy way to engage with the generalist repositories. Please join the GREI Google Group to receive updates on GREI activities and events, as well as the latest posts on the GREI blog. All GREI resources including recordings and slides from past events and guides are publicly available in the GREI Community on Zenodo.

About GREI

The Generalist Repository Ecosystem Initiative (GREI) is a U.S. National Institutes of Health (NIH) initiative that has brought seven generalist repositories together into a collaborative working group focused on establishing “a common set of cohesive and consistent capabilities, services, metrics, and social infrastructure” to enhance NIH data sharing and reuse and to increase awareness and adoption of the FAIR principles.